Visual Technology Tutorial, Part 8: Streaming

This is Part 8 of a video processing technology training series extracted from RGB Spectrum's Design Guide.

Streaming Video and Compression

Conceptually, streaming refers to the delivery of a steady “stream” of media content that’s been digitized for transmission over an IP network. Streamed content can consist of prerecorded data or real-time video, like camera feeds. What complicates streaming is that most data networks were designed for the transmission and delivery of data, rather than high-quality audio and video.

Bandwidth is a measure of the capacity of a network connection. While private internal networks may not suffer from bandwidth constraints, the public internet can be limited by bandwidth capacity, although that issue is improving. Fast Ethernet (up to 100 Mbps) has largely been replaced by Gigabit Ethernet (up to 1 Gbps), while 10 Gigabit and 40 Gigabit networks are not uncommon in network operations centers. However, because even relatively low-resolution uncompressed SVGA video (800x600) can require nearly 1 Gbps, data rates for uncompressed HD video still exceed the bandwidth capacity of most network connections. For this reason, streaming both high-resolution computer graphics and full-motion HD video over networks requires compression.
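The arithmetic behind these figures can be sketched as follows. This is a rough estimate only: the function name, the color depths, and the 60 frames-per-second rate are assumptions chosen for illustration, and blanking intervals and network overhead are ignored.

```python
def uncompressed_bit_rate(width, height, bits_per_pixel=24, frames_per_second=60):
    """Return the raw (uncompressed) video bit rate in bits per second."""
    return width * height * bits_per_pixel * frames_per_second


# Assumed formats: SVGA at 24 and 32 bits per pixel, HD at 24 bits per pixel.
svga_24 = uncompressed_bit_rate(800, 600, bits_per_pixel=24)
svga_32 = uncompressed_bit_rate(800, 600, bits_per_pixel=32)
hd_24 = uncompressed_bit_rate(1920, 1080, bits_per_pixel=24)

print(f"SVGA 800x600, 24 bpp:  {svga_24 / 1e6:.1f} Mbps")
print(f"SVGA 800x600, 32 bpp:  {svga_32 / 1e6:.1f} Mbps")
print(f"HD 1920x1080, 24 bpp: {hd_24 / 1e9:.2f} Gbps")
```

Under these assumptions, SVGA lands between roughly 0.7 and 0.9 Gbps, and 1080p video at about 3 Gbps, which is more than a Gigabit Ethernet link can carry.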

Video compression uses coding techniques to reduce redundancy within and between successive frames. There are two basic techniques, spatial compression and temporal compression, and many compression algorithms employ both. Spatial (or intraframe) compression is applied to each individual frame of the video, compressing pixel information as though it were a still image by reordering or removing information to reduce file size.

Temporal (or interframe) compression, as the name suggests, operates across time. It compares one still frame with an adjoining frame and, instead of saving all the information about each frame into the digital video file, saves only the differences between frames (frame differencing). This type of compression relies on the presence of periodic key frames, called “intra” or “I” frames. At each key frame, the entire still image is saved, and these complete pictures are used as the comparison frames for frame differencing. Temporal compression works best with video content that doesn’t have a lot of motion (for example, talking heads).
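A toy sketch of frame differencing is shown here. It is not how any real codec works internally: frames are assumed to be flat lists of pixel values of equal length, differences are taken against the previous frame rather than against the key frame, and the names `encode`, `decode`, and `key_interval` are made up for this example.

```python
def encode(frames, key_interval=4):
    """Store a full key frame every `key_interval` frames, else only changed pixels."""
    encoded = []
    previous = None
    for index, frame in enumerate(frames):
        if previous is None or index % key_interval == 0:
            encoded.append(("I", list(frame)))  # complete picture
        else:
            # Record only the positions whose value differs from the previous frame.
            changes = {i: new for i, (new, old) in enumerate(zip(frame, previous)) if new != old}
            encoded.append(("D", changes))
        previous = frame
    return encoded


def decode(encoded):
    """Rebuild every frame by applying the stored differences to the running picture."""
    frames = []
    current = None
    for kind, payload in encoded:
        if kind == "I":
            current = list(payload)
        else:
            current = list(current)
            for position, value in payload.items():
                current[position] = value
        frames.append(current)
    return frames


# A mostly static four-pixel "video": little changes between frames.
video = [[1, 1, 1, 1], [1, 2, 1, 1], [1, 2, 3, 1], [1, 2, 3, 1], [9, 9, 9, 9]]
stream = encode(video, key_interval=4)
```

The less the picture changes, the smaller each difference record becomes, which is why low-motion content compresses so well with this approach.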

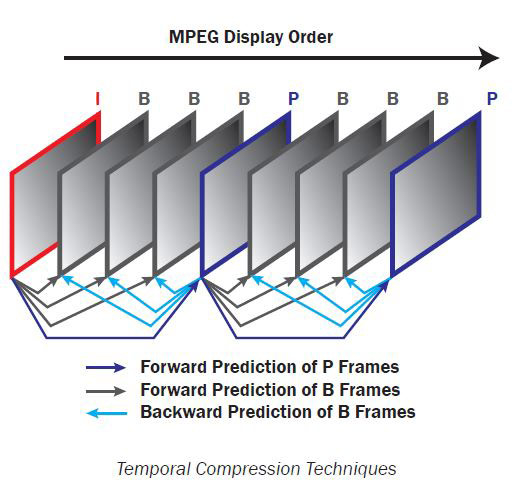

The figure above illustrates the scheme used in temporal compression. In addition to the “I” frame explained above, “P” (predictive) frames include data from previous frames, and “B” (bi-predictive) frames include data from both the previous and the next frames. The use of “B” frames is optional.

The Moving Picture Experts Group (MPEG) compression technique uses a Group of Pictures (GOP) to determine how many “I”, “P”, and “B” frames are used. A GOP size of one means that each compressed frame will consist of a single I frame. This results in lower latency but requires more bandwidth, since less compression is used.
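To make the GOP idea concrete, here is a small sketch that lists the frame types of one GOP in display order. The function name and the `b_frames` parameter are assumptions for illustration. Real encoders choose frame types adaptively and transmit frames in a different (decode) order.

```python
def gop_pattern(gop_size, b_frames=0):
    """Return the frame types of one GOP in display order, e.g. 'IBBPBBP'."""
    if gop_size < 1:
        raise ValueError("gop_size must be at least 1")
    types = ["I"]  # every GOP starts with a complete picture
    while len(types) < gop_size:
        remaining = gop_size - len(types)
        # Leave room for the P frame that closes each run of B frames.
        run = min(b_frames, remaining - 1)
        types.extend("B" * run)
        types.append("P")
    return "".join(types)


print(gop_pattern(1))      # all-intra: every frame is an I frame
print(gop_pattern(4))      # I frame followed by P frames only
print(gop_pattern(12, 2))  # I, P, and B frames mixed
```

With a GOP size of one, the pattern is just `I`, matching the low-latency, high-bandwidth case described above.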

For still-image compression, there are two widely used standards, both developed by the Joint Photographic Experts Group (JPEG). The original standard is known by the same name as the developing organization, JPEG, and it uses a spatial compression algorithm based on the discrete cosine transform. A more recent standard, JPEG 2000, uses a more efficient wavelet-based coding process. Both JPEG and JPEG 2000 can also be used for coding motion video by encoding each frame separately.

Unicast and Multicast

There are two basic streaming architectures: unicast and multicast. Unicast streaming involves a one-to-one (point-to-point) connection between a server and a client; each client gets a unique data stream, and only those clients that request the stream will receive it. Unicast streaming works either for live streaming or on-demand streaming. The number of participants is limited by the bit rate of the video content being streamed, the speed of the server, and the bandwidth of the network conduit.
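Because each unicast client receives its own copy of the stream, the client limit can be estimated from link capacity alone. This is a back-of-the-envelope sketch: the function name and the `headroom` parameter (the fraction of the link reserved for other traffic) are assumptions, and server CPU limits are ignored.

```python
def max_unicast_clients(link_bps, stream_bps, headroom=0.25):
    """Estimate how many unicast copies of a stream fit on one network link."""
    usable_bps = link_bps * (1 - headroom)  # keep some capacity in reserve
    return int(usable_bps // stream_bps)


# Assumed example: a 4 Mbps stream served over a 1 Gbps link.
print(max_unicast_clients(1_000_000_000, 4_000_000))              # with 25% reserved
print(max_unicast_clients(1_000_000_000, 4_000_000, headroom=0))  # theoretical ceiling
```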

Multicast streaming involves a one-to-many relationship between the codec and the clients receiving the stream; all clients receive the same stream by subscribing to a designated multicast IP address. A virtually unlimited number of users can connect to a multicast stream. Because a single data stream is delivered simultaneously to multiple recipients, it reduces the server/network resources that would be needed to send out duplicate data streams. A network must be properly configured for multicast streaming, however. Depending on a network’s infrastructure and type, multicast transmission may not be a feasible option.
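Subscribing to a multicast address can be sketched with Python's standard `socket` module. The group address `239.1.1.1` and port `5004` used here are hypothetical placeholders, and whether the join succeeds depends on the host and network configuration mentioned above.

```python
import ipaddress
import socket


def membership_request(group, interface="0.0.0.0"):
    """Build the 8-byte IPv4 group/interface structure used by IP_ADD_MEMBERSHIP."""
    if not ipaddress.ip_address(group).is_multicast:
        raise ValueError(f"{group} is not a multicast address")
    return socket.inet_aton(group) + socket.inet_aton(interface)


def open_multicast_receiver(group, port):
    """Open a UDP socket and subscribe it to the given multicast group."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    sock.bind(("", port))
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_ADD_MEMBERSHIP, membership_request(group))
    return sock


# Hypothetical usage (requires a multicast-capable network):
# receiver = open_multicast_receiver("239.1.1.1", 5004)
# packet, sender = receiver.recvfrom(2048)
```

Every client that joins the same group receives the same packets, so the sender transmits one stream regardless of audience size.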

H.264 Profiles

In 2003, MPEG, working jointly with the ITU-T Video Coding Experts Group (VCEG), completed the first version of a compression standard known as MPEG-4 Part 10, or H.264. Like other standards in the MPEG family, it uses temporal compression. Although H.264 is not the only compression method, it has become the most commonly used format for recording, compressing, and streaming high-definition video, and it is the method used to encode content on Blu-ray discs.

H.264 is a “family” of standards that includes a number of different sets of capabilities, or “profiles.” All of these profiles rely heavily on temporal compression and motion prediction to reduce the bit rate. The three most commonly applied profiles are Baseline, Main, and High. Each of these profiles defines the specific encoding techniques and algorithms used to compress files.

Baseline Profile

This is the simplest profile, used mostly for low-power, low-cost devices, including some videoconferencing and mobile applications. Baseline Profile can achieve a compression ratio of about 1000:1 (that is, a 1 Gbps stream can be compressed to about 1 Mbps). It uses 4:2:0 chrominance sampling, which means that color information is sampled at half the vertical and half the horizontal resolution of the luminance (black-and-white) information. Other important features of the Baseline Profile are the use of Universal Variable Length Coding (UVLC) and Context Adaptive Variable Length Coding (CAVLC) entropy coding techniques.
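The saving from 4:2:0 sampling can be counted directly. In this sketch the function name and the divisor parameters are assumptions for illustration. It counts samples per frame for one luminance plane plus two chrominance planes.

```python
def samples_per_frame(width, height, chroma_h_div=2, chroma_v_div=2):
    """Count luma plus chroma samples; the default divisors of 2 model 4:2:0 sampling."""
    luma = width * height
    # Two chroma planes, each reduced horizontally and vertically.
    chroma = 2 * (width // chroma_h_div) * (height // chroma_v_div)
    return luma + chroma


full = samples_per_frame(1920, 1080, chroma_h_div=1, chroma_v_div=1)  # 4:4:4
subsampled = samples_per_frame(1920, 1080)                            # 4:2:0
print(full, subsampled, subsampled / full)
```

Halving the chrominance resolution in both directions cuts the total sample count in half before any other compression is applied.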

Main Profile

Main Profile builds on most of the functionality of Baseline and improves the frame prediction algorithms, adding support for B frames. It is used for SD digital TV broadcasts that use the MPEG-4 format, but not for HD broadcasts.

High Profile

H.264 High Profile is the most efficient and powerful profile in the H.264 family, and is the primary profile for broadcast and disc storage, particularly for HDTV and Blu-ray disc storage formats. It can achieve a compression ratio of about 2000:1. The High Profile also uses an adaptive transform that can select between 4x4-pixel and 8x8-pixel blocks. For example, 4x4 blocks are used for portions of the picture that are dense with detail, while portions that have little detail are compressed using 8x8 blocks. The result is the preservation of video image quality while reducing network bandwidth requirements by up to 50 percent. By applying H.264 High Profile compression, a 1 Gbps stream can be compressed to about 500 kbps.
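The compression ratios quoted for the Baseline and High profiles translate into bit rates as follows. The function name is an assumption, and the ratios are the approximate figures from the text rather than guaranteed results, since real ratios depend on content and encoder settings.

```python
def compressed_bit_rate(source_bps, compression_ratio):
    """Return the bit rate after compressing by the given ratio (e.g. 2000 for 2000:1)."""
    return source_bps / compression_ratio


source = 1_000_000_000  # 1 Gbps uncompressed stream
baseline = compressed_bit_rate(source, 1000)  # Baseline Profile, about 1000:1
high = compressed_bit_rate(source, 2000)      # High Profile, about 2000:1

print(f"Baseline: {baseline / 1e6:.1f} Mbps")
print(f"High:     {high / 1e3:.0f} kbps")
print(f"Saving:   {(1 - high / baseline) * 100:.0f} percent")
```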

In the H.264 standard, there are a number of different “levels” which specify constraints indicating a degree of required decoder performance for a profile. In practice, levels specify the maximum data rate and video resolution that a device can play back.

For example, a level of support within a profile will specify the maximum picture resolution, frame rate, and bit rate that a decoder may be capable of using. Lower levels mean lower resolutions, lower allowed maximum bitrates, and smaller memory requirements for storing reference frames. A decoder that conforms to a given level is required to be capable of decoding all bit streams that are encoded for that level and for all lower levels.
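The conformance rule in the last sentence amounts to an ordering check. The sketch below lists H.264 level identifiers from lowest to highest (a subset: later editions of the standard add higher levels) and deliberately leaves out the numeric resolution and bit-rate limits, which should be taken from the specification. The function name is an assumption.

```python
# H.264 level identifiers in ascending order (subset; newer editions add more).
LEVELS = ["1", "1b", "1.1", "1.2", "1.3", "2", "2.1", "2.2",
          "3", "3.1", "3.2", "4", "4.1", "4.2", "5", "5.1", "5.2"]


def can_decode(decoder_level, stream_level):
    """A decoder conforming to a level must handle that level and all lower ones."""
    return LEVELS.index(stream_level) <= LEVELS.index(decoder_level)


print(can_decode("4.1", "3.1"))  # decoder level is higher than the stream level
print(can_decode("3.1", "4.1"))  # stream level exceeds the decoder level
```

This check applies within a single profile. A decoder must also support the profile the stream was encoded with.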

H.265 Standard

The High Efficiency Video Coding (HEVC) standard, also known as H.265, was developed as a successor to H.264 by the Joint Collaborative Team on Video Coding (JCT-VC). H.265 reduces the bit rate required for streaming video when compared to H.264 while maintaining comparable video quality.

Due to the complexity of this newer encoding/decoding standard, more advanced processing is required. However, advantages such as lower latency can be realized with H.265.

Some manufacturers, such as RGB Spectrum, take advantage of both the H.264 and H.265 compression standards. Zio decoders, for example, can decode both H.264 and H.265 streams. This allows the customer to use whichever standard is appropriate for the application.

Latency

When a digital video source is directly connected to a display using an interface such as DVI, video is easily passed without the need for compression. Transmission is virtually instantaneous, because no extra image signal processing is involved. But when compression techniques are used to make data streams more compatible with network bandwidth constraints, the processing takes a certain amount of time, known as latency. Latency is one of the key considerations for evaluating streaming products, processes, and applications.

Protocols

Many protocols exist for use in streaming media over networks. These protocols balance the trade-offs between reliable delivery, latency, and bandwidth requirements, and the selection of a set of protocols depends on the specific application.

One of the most common is the Real-time Transport Protocol (RTP), which defines a standardized packet format for delivering audio and video over IP networks. RTP is often used in conjunction with the RTP Control Protocol (RTCP), which monitors transmission statistics and quality of service (QoS) and aids synchronization of multiple streams. Both protocols work independently of the underlying Transport layer and Network layer protocols. A third protocol, the Real Time Streaming Protocol (RTSP), is an Application layer protocol used for establishing and controlling real-time media sessions between end points, using transport-style commands such as play, pause, and teardown (stop).
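RTSP commands are plain-text requests, similar in shape to HTTP. The helper below is a minimal sketch of how such a request is formatted. The function name, the URL, and the session identifier are hypothetical, and a real client would also handle responses, SETUP negotiation, and authentication.

```python
def rtsp_request(method, url, cseq, session=None):
    """Format a minimal RTSP/1.0 request as a text message."""
    lines = [f"{method} {url} RTSP/1.0", f"CSeq: {cseq}"]
    if session is not None:
        lines.append(f"Session: {session}")
    # Header lines end with CRLF; an empty line terminates the request.
    return "\r\n".join(lines) + "\r\n\r\n"


# Hypothetical stream URL and session identifier.
play = rtsp_request("PLAY", "rtsp://example.com/stream", cseq=3, session="12345")
pause = rtsp_request("PAUSE", "rtsp://example.com/stream", cseq=4, session="12345")
print(play)
```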

Generally, RTP runs on top of the User Datagram Protocol (UDP), a Transport layer protocol that can deliver live streams with low latency but does not guarantee delivery or retransmit lost packets. RTP is generally used for multi-point, multicast streaming.
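The standardized RTP packet format begins with a 12-byte fixed header, which can be sketched with Python's `struct` module. The function name and the example values (dynamic payload type 96, the SSRC identifier) are assumptions for illustration. Padding, header extensions, and contributing-source lists are left out.

```python
import struct


def rtp_header(payload_type, sequence, timestamp, ssrc, marker=False):
    """Pack the 12-byte fixed RTP header (version 2, no padding, extension, or CSRCs)."""
    version = 2
    byte0 = version << 6                                # V=2, P=0, X=0, CC=0
    byte1 = (int(marker) << 7) | (payload_type & 0x7F)  # marker bit + payload type
    return struct.pack("!BBHII", byte0, byte1,
                       sequence & 0xFFFF,       # 16-bit sequence number
                       timestamp & 0xFFFFFFFF,  # 32-bit media timestamp
                       ssrc & 0xFFFFFFFF)       # 32-bit stream source identifier


header = rtp_header(payload_type=96, sequence=1, timestamp=90000, ssrc=0x12345678)
packet = header + b"compressed video payload"
print(len(header), header.hex())
```

The sequence number lets a receiver detect lost or reordered packets, which matters because UDP itself will not report or recover them.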
